Before ChatGPT, there was cold silence: how early AI dreams froze and thawed

Artificial intelligence is having its moment now, but it has not always been this way. In our previous blog, we saw how Artificial Neural Networks (ANNs) were inspired by our brain. That was not the end of the story, though. Early AI had its struggles. It saw a spring, full of hope and enthusiasm, and then a winter, when those hopes were shattered and AI was almost ignored. Yet even in those hard times, it kept growing. It was full-on Bollywood-level drama.

So why should you read this blog? If you are learning AI, knowing the struggles behind it makes you realize how lucky we are to be born in this era of new beginnings. Whatever happens in the future, whether AI starts to rule us or we go interstellar, we will witness it, and knowing the history behind it completes the story.

So now let’s time travel back to 1943.

The AI Spring (1943–1969)

McCulloch-Pitts Neuron: AI’s First Spark (1943)

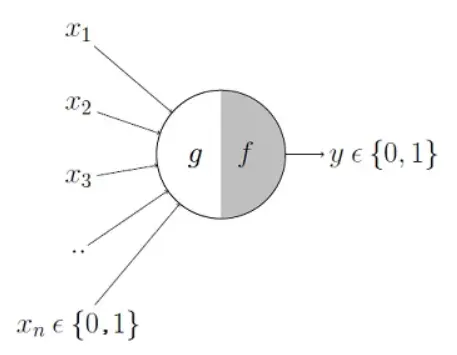

Warren Sturgis McCulloch, an American neurophysiologist, and Walter Harry Pitts, Jr., an American logician, were trying to understand how our brain makes decisions. They proposed a simplified model of a neuron.

According to the model, a neuron takes a list of binary inputs, for example: Is it raining? Do I have money? Do I have time? Each answer is encoded as 1 for yes and 0 for no. The neuron adds up all the inputs, and if the sum crosses a threshold, it outputs 1; otherwise, it outputs 0. So the output always falls into one of two classes.

Let’s understand this with the following table. Suppose we want to predict whether I will go out for snacks. Many things could influence my decision, but for the sake of simplicity we will take only three:

1) Is it dry outside (not raining)? { Yes: 1, No: 0 }

2) Do I have money? { Yes: 1, No: 0 }

3) Do I have time? { Yes: 1, No: 0 }

Then, we have g(x), which sums up all the inputs.

\[ g(x) = x_1 + x_2 + x_3 \]

Now, let’s assume our threshold is 2: if g(x) is greater than 2, then f(g(x)) returns 1, which means I will go out; otherwise, it returns 0.

| \( x_1 \) | \( x_2 \) | \( x_3 \) | \( g(x) = \sum_{i=1}^{3} x_i \) | \( f(g(x)) \) | \( y \) |
|---|---|---|---|---|---|
| 1 | 1 | 1 | 3 | 1 | Yes, I will go out |
| 1 | 1 | 0 | 2 | 0 | No, I won't go out |
| 0 | 1 | 1 | 2 | 0 | No, I won't go out |
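A few lines of Python make the rule concrete. This is a minimal sketch that follows the convention used above (the neuron fires only when g(x) is strictly greater than the threshold 2); the function name `mcculloch_pitts` is just an illustrative choice, not a standard API.

```python
from itertools import product


def mcculloch_pitts(inputs, threshold=2):
    """Return 1 (fire) if the sum of the binary inputs is greater than the threshold, else 0."""
    g = sum(inputs)                   # g(x) = x1 + x2 + x3
    return 1 if g > threshold else 0  # f(g(x))


# Enumerate all 8 input combinations, including the rows the table does not show.
for x in product([0, 1], repeat=3):
    print(x, "g(x) =", sum(x), "f(g(x)) =", mcculloch_pitts(x))
```

Only (1, 1, 1) fires, matching the first row of the table. Many textbooks write the firing condition as g(x) ≥ θ instead; with binary inputs, "greater than 2" is the same as θ = 3.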

The Perceptron: First Steps Toward Machine Learning (1957–1958)

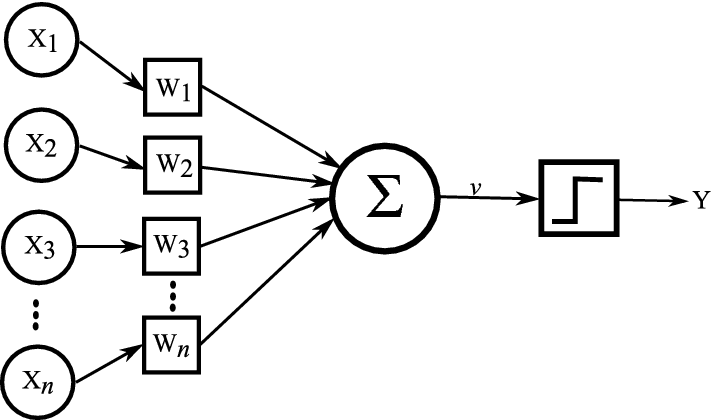

Then another American, the psychologist Frank Rosenblatt, came into the picture with an improved neuron model named the Perceptron. It is similar to the McCulloch-Pitts neuron, but now each input is multiplied by a corresponding weight. The idea was that not every factor has the same influence on our decision. For example, having enough money matters more than whether I am in the mood to go out. Keeping this in mind, Rosenblatt suggested that instead of directly adding up the inputs, we should multiply each one by a weight that reflects how much it affects our decision. Crucially, these weights did not have to be set by hand: the perceptron could learn them from labeled examples.
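The weighted version can be sketched in the same style. The weights and threshold below are made-up values for illustration (assumption: x2, "Do I have money?", gets the largest weight); a real perceptron would learn its weights from data rather than have them set by hand like this.

```python
def perceptron(inputs, weights, threshold):
    """Weighted sum of the inputs followed by a hard threshold."""
    weighted_sum = sum(w * x for w, x in zip(weights, inputs))
    return 1 if weighted_sum >= threshold else 0


# Hypothetical weights for the snacks example (x1, x2, x3); x2 ("Do I have money?") matters most.
weights = [0.2, 0.6, 0.3]
threshold = 0.7

print(perceptron([0, 1, 0], weights, threshold))  # 0.6 < 0.7 -> 0
print(perceptron([0, 1, 1], weights, threshold))  # about 0.9 >= 0.7 -> 1
print(perceptron([1, 0, 1], weights, threshold))  # 0.5 < 0.7, no money -> 0
```

Unlike the McCulloch-Pitts neuron, where every input counted equally, here money plus time is enough to go out, while the other two inputs together are not.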

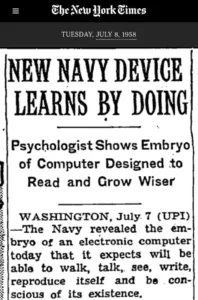

The perceptron became a big hit at the time, as people speculated that Artificial Intelligence was finally within their grasp: machines that could do everything we could think of, such as translating languages, making abstract decisions, recognizing speech, and whatnot. Some popular magazines described the perceptron as an "electronic brain" that could replace humans. The US Navy poured 1 million dollars into perceptron development (about 10 million dollars today), believing it would lead to machines with human-like thinking abilities. This level of government support gave the public the impression that human-level AI was just around the corner, which attracted even more public and private funding and fueled an AI Spring.

The Navy revealed the embryo of an electronic computer today that it expects will be able to walk, talk, see, write, reproduce itself and be conscious of its existence.

- The New York Times (July 8, 1958)

Multilayer Perceptrons: A Leap Forward (1965–1968)

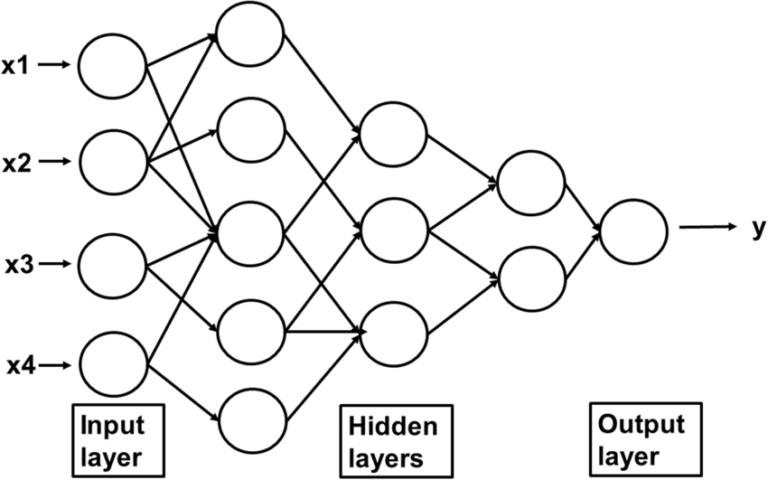

Around 1965, the Soviet and Ukrainian mathematician Alexey Ivakhnenko and his group published the Group Method of Data Handling (GMDH). It was one of the first deep learning methods, using a multilayered neural network in which each layer contained multiple perceptron-like units connected to the layers around them.

We could say this was one of the very first drafts of the deep neural networks we use today, although it did not gain much popularity at the time.

Many other research efforts continued through this 12-13 year springtime of AI. Too much enthusiasm, too much hope. But then…

The Shift Toward AI Winter

Minsky & Papert’s Critique: A Cold Wind Blows (1969)

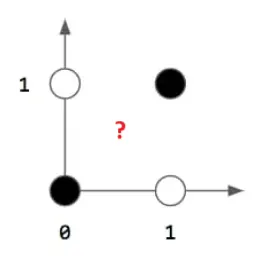

Perceptrons, a highly influential book published in 1969 by Marvin Minsky and Seymour Papert, analyzed perceptrons and exposed their limitations. People had been very optimistic about the future of perceptrons, hoping that, given enough examples, they could soon model almost any real-world scenario and produce results very close to reality. All this enthusiasm was shattered when the book showed that although a perceptron can be trained to classify any linearly separable data correctly, it fails on data that is not linearly separable. They showed that it cannot even compute the simple XOR operation.
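The XOR failure can also be checked with a little algebra. XOR outputs 1 exactly when its two inputs differ. Assume a two-input perceptron that outputs 1 when \( w_1 x_1 + w_2 x_2 \ge \theta \) and 0 otherwise; computing XOR would require all four of these conditions at once:

\[
\begin{aligned}
(0,0) \mapsto 0 &: \quad 0 < \theta \\
(1,0) \mapsto 1 &: \quad w_1 \ge \theta \\
(0,1) \mapsto 1 &: \quad w_2 \ge \theta \\
(1,1) \mapsto 0 &: \quad w_1 + w_2 < \theta
\end{aligned}
\]

Adding the second and third lines gives \( w_1 + w_2 \ge 2\theta \). The first line makes \( \theta > 0 \), so \( 2\theta > \theta \) and therefore \( w_1 + w_2 > \theta \), which contradicts the last line. No choice of weights and threshold satisfies all four conditions, which is exactly what it means for XOR to be not linearly separable.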

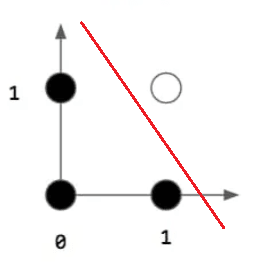

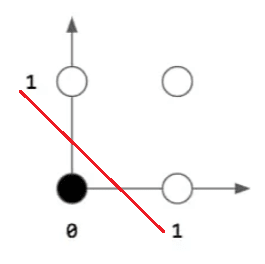

Let’s understand this by comparing a perceptron’s output on the AND, OR, and XOR datasets. If you are new to these operators, here is a brief introduction: all three are logical operators that work with Boolean values (here 1 means true and 0 means false). AND returns true only if all the inputs are true. OR returns true if any of the inputs is true. XOR, short for ‘exclusive OR’, is where it gets interesting. It works like OR, except that it excludes the case where all the inputs are true; in other words, for two inputs it returns true only if the inputs are different. This is a problem for a perceptron. Why? Let’s build some geometric intuition.

AND

| Input 1 | Input 2 | Output |
|---|---|---|
| 1 | 1 | 1 |
| 1 | 0 | 0 |
| 0 | 1 | 0 |
| 0 | 0 | 0 |

OR

| Input 1 | Input 2 | Output |
|---|---|---|
| 1 | 1 | 1 |
| 1 | 0 | 1 |
| 0 | 1 | 1 |
| 0 | 0 | 0 |

XOR

| Input 1 | Input 2 | Output |
|---|---|---|
| 1 | 1 | 0 |
| 1 | 0 | 1 |
| 0 | 1 | 1 |
| 0 | 0 | 0 |

In the graphs, the white dots represent true (1) and the black dots represent false (0). The red line is the decision boundary drawn by a perceptron. For AND and OR, the perceptron can find a line that separates all the trues from the falses. For XOR, however, no single straight line can split the data into true and false regions.
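We can also watch this happen during training. Below is a minimal sketch of the classic perceptron learning rule on the three datasets. It assumes a bias term b in place of a threshold (the unit fires when w·x + b ≥ 0), zero initial weights, and a learning rate of 1; the names `DATASETS`, `predict`, and `train_perceptron` are made up for this example. For linearly separable data, the rule is guaranteed to stop making mistakes after finitely many updates (the perceptron convergence theorem); for XOR no separating line exists, so it can never complete an error-free pass.

```python
DATASETS = {
    "AND": {(0, 0): 0, (0, 1): 0, (1, 0): 0, (1, 1): 1},
    "OR": {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 1},
    "XOR": {(0, 0): 0, (0, 1): 1, (1, 0): 1, (1, 1): 0},
}


def predict(w, b, x):
    """Fire if w . x + b >= 0; the line w . x + b = 0 is the decision boundary."""
    return 1 if w[0] * x[0] + w[1] * x[1] + b >= 0 else 0


def train_perceptron(data, lr=1, max_epochs=100):
    """Perceptron learning rule. Returns (weights, bias, converged)."""
    w, b = [0, 0], 0
    for _ in range(max_epochs):
        mistakes = 0
        for x, target in data.items():
            error = target - predict(w, b, x)  # -1, 0 or +1
            if error != 0:
                mistakes += 1
                # Shift the boundary so this point is more likely to be classified correctly.
                w[0] += lr * error * x[0]
                w[1] += lr * error * x[1]
                b += lr * error
        if mistakes == 0:  # one full pass with no mistakes: a separating line was found
            return w, b, True
    return w, b, False


for name, data in DATASETS.items():
    w, b, converged = train_perceptron(data)
    print(name, "converged" if converged else "did not converge", "w =", w, "b =", b)
```

AND and OR report "converged" after a few passes, while XOR still misclassifies at least one point after 100 epochs.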

The AI Winter (until 1986)

Marvin Minsky and Seymour Papert showed that if our data is not linearly separable, a single perceptron is not going to work. However, in their book they also noted that this limitation applies to single perceptrons only, and that by combining multiple perceptrons we might achieve our goals even for non-linear data. Somehow, the second part of the message got lost, and the crowd focused only on the first half: we had such high hopes for perceptrons, yet they could not even solve a simple function like XOR, so how would they ever solve complex real-world problems? These thoughts led to a big disappointment, and funding agencies started cutting funding for Connectionist AI research, which included perceptrons. All these events triggered a long pause of nearly two decades, which we call the AI Winter.
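Here is what "multiple perceptrons" can look like: a small sketch of a two-layer network that computes XOR. The weights are hand-picked for illustration (one of many valid choices), not learned. The idea is that XOR(x1, x2) = AND(OR(x1, x2), NAND(x1, x2)), so each hidden perceptron draws one straight line and the output perceptron combines them. The helper names `step`, `neuron`, and `xor_network` are hypothetical.

```python
def step(z):
    return 1 if z >= 0 else 0


def neuron(inputs, weights, bias):
    """A single perceptron: weighted sum plus bias, then a hard threshold."""
    return step(sum(w * x for w, x in zip(weights, inputs)) + bias)


def xor_network(x1, x2):
    # Hidden layer: two perceptrons, each drawing one straight line.
    h_or = neuron([x1, x2], [1, 1], -0.5)     # OR: fires if at least one input is 1
    h_nand = neuron([x1, x2], [-1, -1], 1.5)  # NAND: fires unless both inputs are 1
    # Output layer: AND of the two hidden units.
    return neuron([h_or, h_nand], [1, 1], -1.5)


for x1 in (0, 1):
    for x2 in (0, 1):
        print(x1, x2, "->", xor_network(x1, x2))
```

Each unit on its own is still linear; the non-linear decision region comes from stacking them in layers.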

The AI Winter was a period of very little progress in Connectionist AI, although people were still interested in, and kept working on, Symbolic AI. If you are unfamiliar with Connectionist AI and Symbolic AI, let’s take a quick look.

Fundamentally, AI has two different paradigms:

- Symbolic AI (Good Old-Fashioned AI – GOFAI)

It uses symbols and rules to represent knowledge and reasoning. We write explicit rules, such as ‘if-then’ logic, and the system reasons over them symbolically.

Example: IF raining THEN take_umbrella.

Here, IF and THEN form the logical rule.

raining and take_umbrella are symbols: if the first symbol is true, the rule concludes that the second symbol is true as well.

- Connectionist AI (Neural Networks / Sub-Symbolic AI)

It tries to mimic the human brain using artificial neural networks. It learns patterns from examples rather than from explicit rules.

Examples: CNN, RNN, Transformers, etc.
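To make the contrast concrete, here is a toy sketch. The symbolic half stores the rule IF raining THEN take_umbrella explicitly and applies it with simple forward chaining; the connectionist half never sees the rule and instead learns the same mapping from two labeled examples with a single perceptron. The rule format and the names `RULES` and `infer` are illustrative assumptions, not a real expert-system or neural-network library.

```python
# Symbolic AI: knowledge is an explicit, human-written IF-THEN rule.
RULES = [("raining", "take_umbrella")]  # IF raining THEN take_umbrella


def infer(facts):
    """Forward chaining: apply IF-THEN rules until no new facts can be derived."""
    facts = set(facts)
    changed = True
    while changed:
        changed = False
        for condition, conclusion in RULES:
            if condition in facts and conclusion not in facts:
                facts.add(conclusion)
                changed = True
    return facts


# Connectionist AI: no rule is written; one neuron learns the mapping from examples.
examples = [(1, 1), (0, 0)]  # (raining, take_umbrella) encoded as 1/0
w, b = 0, 0
for _ in range(10):  # a few passes of the perceptron learning rule
    for x, target in examples:
        prediction = 1 if w * x + b >= 0 else 0
        w += (target - prediction) * x
        b += target - prediction

print(sorted(infer({"raining"})))                    # ['raining', 'take_umbrella']
print([1 if w * x + b >= 0 else 0 for x in (0, 1)])  # [0, 1]
```

Both end up with the same behavior, but in the symbolic version a human can read the rule directly, while in the connectionist version the "rule" is hidden in the learned numbers w and b.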

Quiet Breakthroughs That Melted the Ice

Backpropagation Resurfaces

It is hard to say exactly when the backpropagation technique was discovered, since this was a time when many ideas were discovered and rediscovered independently, because researchers often did not know about each other’s work around the globe. Around 1982, Paul Werbos applied backpropagation to artificial neural networks for the first time.

It was eventually popularized by the work of David E. Rumelhart, Geoffrey Hinton, and Ronald J. Williams (1986), Yann LeCun (Chief AI Scientist at Meta as of February 2025), and others. We could say this was a big achievement, since we still use backpropagation to train the ANNs behind today’s deep learning models.
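To show what backpropagation actually computes, here is a minimal pure-Python sketch (not the original formulation by Werbos or Rumelhart et al.). It assumes a tiny 2-2-1 network with sigmoid activations and a squared-error loss on the XOR data; all function names are hypothetical. `backprop` applies the chain rule from the output layer back to the hidden layer, and `numerical_grad` checks the result against a finite-difference estimate, a common sanity test for gradient code.

```python
import math
import random

XOR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(p, x):
    """p = [W1 (4 values, row-major 2x2), b1 (2), W2 (2), b2 (1)]: 9 parameters in total."""
    h = [sigmoid(p[2 * j] * x[0] + p[2 * j + 1] * x[1] + p[4 + j]) for j in range(2)]
    y = sigmoid(p[6] * h[0] + p[7] * h[1] + p[8])
    return h, y


def loss(p):
    """Mean of 0.5 * (y - t)^2 over the four XOR examples."""
    return sum(0.5 * (forward(p, x)[1] - t) ** 2 for x, t in XOR_DATA) / len(XOR_DATA)


def backprop(p):
    """Gradient of loss(p), propagated from the output layer back to the hidden layer."""
    grad = [0.0] * len(p)
    for x, t in XOR_DATA:
        h, y = forward(p, x)
        delta_out = (y - t) * y * (1 - y)  # dLoss / d(output pre-activation)
        for j in range(2):
            delta_h = delta_out * p[6 + j] * h[j] * (1 - h[j])  # error sent back to hidden unit j
            grad[2 * j] += delta_h * x[0]       # hidden weight from input 1
            grad[2 * j + 1] += delta_h * x[1]   # hidden weight from input 2
            grad[4 + j] += delta_h              # hidden bias
            grad[6 + j] += delta_out * h[j]     # output weight
        grad[8] += delta_out                    # output bias
    return [g / len(XOR_DATA) for g in grad]


def numerical_grad(p, eps=1e-6):
    """Central finite differences, used only to check backprop."""
    grad = []
    for i in range(len(p)):
        plus, minus = p[:], p[:]
        plus[i] += eps
        minus[i] -= eps
        grad.append((loss(plus) - loss(minus)) / (2 * eps))
    return grad


random.seed(0)
params = [random.uniform(-1, 1) for _ in range(9)]
print(max(abs(a - b) for a, b in zip(backprop(params), numerical_grad(params))))  # tiny

start = loss(params)
for _ in range(2000):  # plain gradient descent, learning rate 0.5
    params = [w - 0.5 * g for w, g in zip(params, backprop(params))]
print(f"loss: {start:.4f} -> {loss(params):.4f}")
```

The same gradient drives plain gradient descent in the last few lines. Depending on the random starting weights, a network this small can still get stuck, so the sketch only reports how much the loss dropped rather than claiming it always solves XOR.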

Universal Approximation Theorem

The seed planted by Alexey Ivakhnenko in the spring period bloomed again, this time as a theoretical result: the Universal Approximation Theorem, formalized around 1989 (notably by George Cybenko, and by Kurt Hornik, Maxwell Stinchcombe, and Halbert White). Roughly, the theorem says: ‘A feedforward network with at least one hidden layer of non-linear neurons can approximate any continuous function on a bounded, closed domain to any desired precision, given enough neurons.’ We will talk about it in more detail in another blog. Here, let’s focus on how it helped break the ice of the AI Winter. In essence, the theorem means that with enough neurons, a network can approximate any such function, linear or non-linear, as precisely as we want. Note that it only guarantees that such a network exists; it does not tell us how to find its weights. Still, it was a big breakthrough, because it answered the issue raised by Marvin Minsky and Seymour Papert, that single perceptrons only work for linearly separable data, which was one of the main causes of the AI Winter.
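The theorem is an existence result, but a simple one-dimensional construction gives some intuition for why more neurons buy more precision. The sketch below is not how the theorem is proved. It assumes step activations and approximates sin on [0, π] with one hidden layer: hidden neuron k "switches on" at a point x_k, and its output weight adds the jump f(x_k) - f(x_(k-1)), so the network builds a staircase that follows the curve. The names `staircase_network` and `max_error` are hypothetical.

```python
import math


def step(z):
    return 1.0 if z >= 0 else 0.0


def staircase_network(f, a, b, n_hidden):
    """One hidden layer of step neurons whose weighted sum approximates f on [a, b]."""
    h = (b - a) / n_hidden
    knots = [a + k * h for k in range(n_hidden + 1)]
    hidden_biases = [-knots[k] for k in range(1, n_hidden + 1)]  # neuron k fires when x >= knots[k]
    output_weights = [f(knots[k]) - f(knots[k - 1]) for k in range(1, n_hidden + 1)]
    output_bias = f(a)

    def network(x):
        # Every hidden neuron has input weight 1, so its pre-activation is x + bias.
        return output_bias + sum(w * step(x + c) for w, c in zip(output_weights, hidden_biases))

    return network


def max_error(f, network, a, b, samples=1000):
    """Largest absolute gap between f and the network on evenly spaced sample points."""
    xs = [a + (b - a) * i / samples for i in range(samples + 1)]
    return max(abs(f(x) - network(x)) for x in xs)


for n in (10, 100, 1000):
    net = staircase_network(math.sin, 0.0, math.pi, n)
    print(n, "hidden neurons -> max error", round(max_error(math.sin, net, 0.0, math.pi), 4))
```

The error shrinks roughly in proportion to the spacing between switch-on points (π/n here), which is the "enough neurons, any precision" idea in miniature. Real networks use smooth activations and learn their weights with backpropagation instead of computing them directly like this.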

This theorem drew people’s attention back to Connectionist AI research. Although it was possible in theory, a lack of computational power made handling all these neurons at once very unstable in practice. As time went on, our hardware improved as well, which enabled more advanced work, and we were finally able to train multilayer perceptrons reliably. It took some time, but the winter was finally over.
